Debrief of the event with Eric / Patrick (Team 1) and Kevin / Mark (Team 2).

Patrick and Eric started off with a low-probability tornado warning early on, looking only at tornado threats. Patrick would have issued a Tornado Warning around the time that the actual NWS warning came out. He felt that issuing the low pre-warning probabilities was very natural. Kevin says that this mirrors what happens in a real office as the forecasters discuss how confident they are about issuing a warning.

The two teams handed off one storm from one team to the other around 2200-2215 UTC.

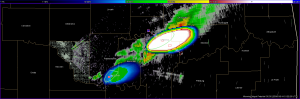

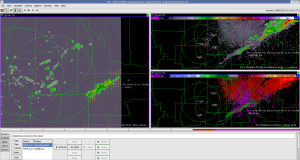

Playing back the tornado threat areas overlaid on the NWS Tornado Warnings: Eric believes that the grids make it easier to think in a storm-based mode. Kevin mentions that the NWS tornado warnings are probably for the entire storm (including hail / wind threat).

Note: at 2257 there may have been a time error on the southern set of storms. Check data later.

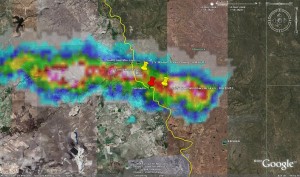

There is an interesting example around 0000 UTC of a threat area at the border of three CWAs. We are also noting differences in the size of the NWS polygons drawn from CWA to CWA.

Patrick notes that he would be OK with some level of automation — especially for hail threats, and especially if we are issuing warnings for different threat types. He wouldn’t want the algorithm issuing the warning, but would like the guidance that he could tweak and then issue.

Patrick notes that the low-probability threats they issued were based largely on the environmental conditions that the storms were developing in.

Eric thought that the workload was pretty heavy: he took over nine threats from Patrick halfway through the event. Patrick thought that the load was not too different from an event at the NWSFO.

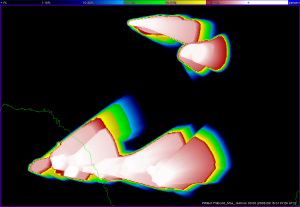

Kevin says that the warningContourSource should either (a) advect with your warning or (b) overlay an outline of your cone shape that shrinks with time; either would help with management. There were too many circles, and it was hard to tell which belonged to which storm.

Mark would like to see the circles (initial threat areas) advect as well.
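As a rough illustration of what advecting an initial threat circle could mean, here is a minimal sketch. It is not how WDSSII handles threat areas: the `ThreatCircle` class, the `advect` function, the flat x/y grid in kilometers, and the constant storm motion are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class ThreatCircle:
    """Hypothetical initial threat area on a flat x/y grid (km)."""
    x_km: float
    y_km: float
    radius_km: float


def advect(circle: ThreatCircle, speed_kmh: float,
           heading_deg: float, minutes: float) -> ThreatCircle:
    """Move the circle center along a constant storm-motion vector.

    heading_deg is the direction the storm moves toward, measured
    clockwise from north (0 = north, 90 = east). The radius is unchanged.
    """
    distance_km = speed_kmh * minutes / 60.0
    theta = math.radians(heading_deg)
    return ThreatCircle(
        x_km=circle.x_km + distance_km * math.sin(theta),
        y_km=circle.y_km + distance_km * math.cos(theta),
        radius_km=circle.radius_km,
    )
```

For instance, a circle at the origin moving due east at 60 km/h would sit about 30 km east of its starting point after 30 minutes.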

Eric let one expire by accident; he would like to see a situational awareness tool that draws the forecaster's eye to the expiration.
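The kind of expiration check Eric describes could be sketched as follows. This is only an illustration under assumptions: the `expiring_soon` function, the dictionary of threat IDs to expiration times, and the five-minute lead time are hypothetical and not part of any existing tool.

```python
from datetime import datetime, timedelta


def expiring_soon(threats: dict, now: datetime,
                  lead: timedelta = timedelta(minutes=5)) -> list:
    """Return IDs of active threats that expire within `lead` of `now`.

    `threats` maps a hypothetical threat ID to its expiration time.
    Already-expired threats are skipped; results are ordered soonest first.
    """
    due = [tid for tid, expires in threats.items()
           if now <= expires <= now + lead]
    return sorted(due, key=lambda tid: threats[tid])
```

A display could poll this list on each refresh and highlight the returned threat areas so that none lapses unnoticed.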

Eric would like to see a way to group the threat areas together to change the direction on multiple areas at once.
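One way to picture this grouping request is a bulk update keyed on a group label. The sketch below is hypothetical: the `set_group_direction` function and the `group` and `direction_deg` fields are invented for illustration and do not reflect the actual threat-area data model.

```python
def set_group_direction(threats: list, group: str,
                        direction_deg: float) -> int:
    """Set the motion direction on every threat area in one group.

    Each threat is a dict with hypothetical 'group' and 'direction_deg'
    keys. Returns the number of threat areas that were updated.
    """
    updated = 0
    for threat in threats:
        if threat["group"] == group:
            # Normalize to the 0-360 degree range.
            threat["direction_deg"] = direction_deg % 360
            updated += 1
    return updated
```

With this shape, a forecaster action such as "turn everything in the southern group to 250 degrees" becomes a single call instead of one edit per threat area.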

Eric says that he really likes the ability to show low pre-warning probabilities, and Kevin agrees with this. It would be useful for downstream users. Eric likes that it lets the forecaster focus on the meteorology and science and divorce it from the policy.

Patrick expected the knobology to be difficult to work with, but found that it was not much different from working in the NWS office. He thought he was able to manage the same workload in WDSSII (with some experience) as in the NWSFO.

Travis Smith (EWP Backup Weekly Coordinator, 27-30 May)