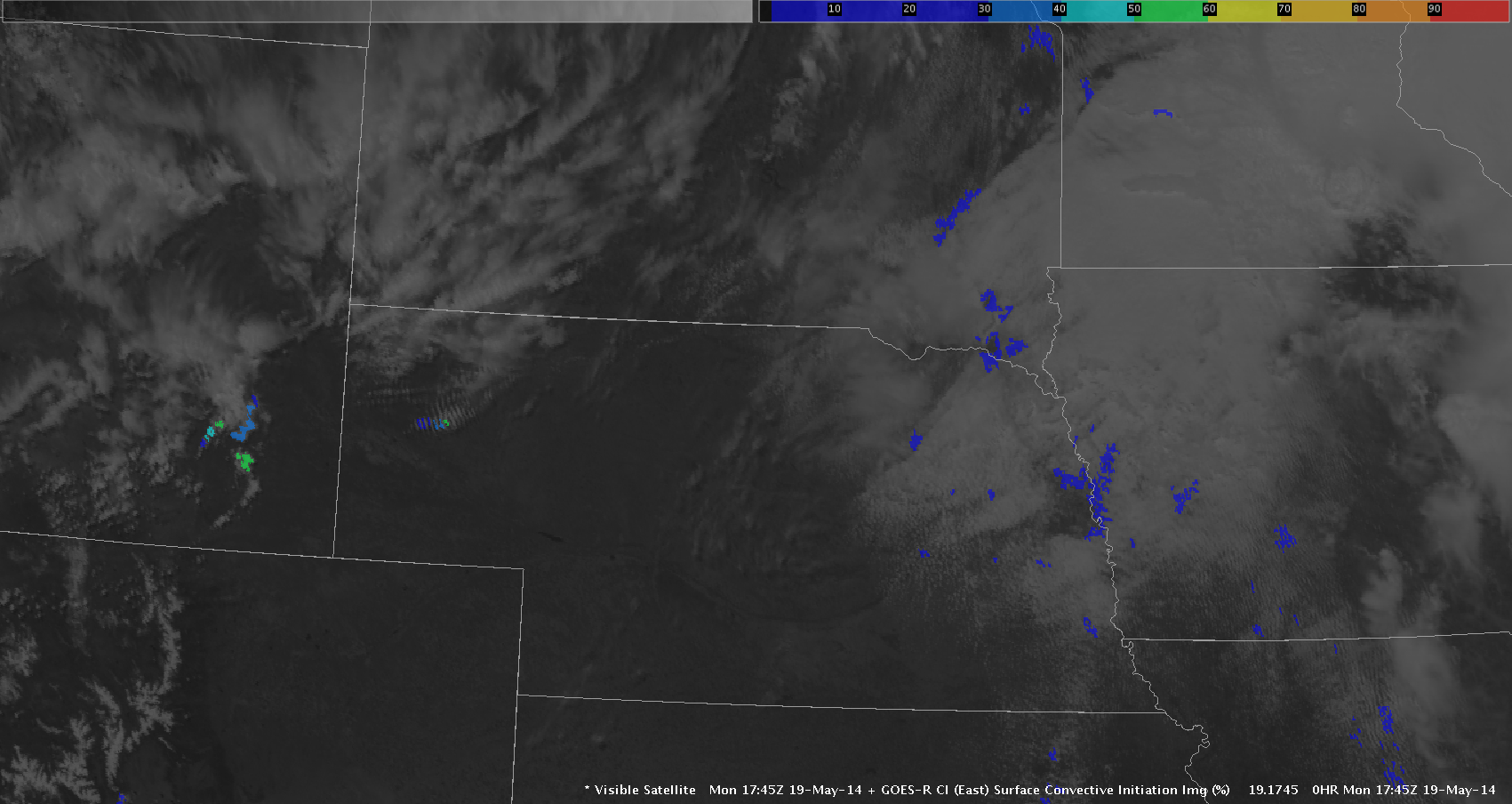

Monday 19 May 2014 begins the third week of our four-week spring experiment of the 2014 NSSL-NWS Experimental Warning Program (EWP2014) in the NOAA Hazardous Weather Testbed at the National Weather Center in Norman, OK. There will be two primary projects geared toward WFO applications: 1) a test of a Probabilistic Hazards Information (PHI) prototype, as part of the FACETS program, and 2) an evaluation of multiple experimental products (formerly referred to as “The Spring Experiment”). The latter project – known as “The Big Experiment” – will have three components: a) an evaluation of multiple CONUS GOES-R convective applications, including satellite and lightning; b) an evaluation of the model performance and forecast utility of two convection-allowing models (the variational Local Analysis and Prediction System and the Norman WRF); and c) an evaluation of a new feature tracking tool. We will also be coordinating with the Experimental Forecast Program and evaluating its probabilistic severe weather outlooks as guidance for our warning operations. Operational activities will take place Monday through Friday.

For the week of 19-23 May, our distinguished NWS guests will be Joshua Boustead (WFO Omaha, NE), Linda Gilbert (WFO Louisville, KY), Grant Hicks (WFO Glasgow, MT), Julie Malingowski (WFO Grand Junction, CO), and Trisha Palmer (WFO Peachtree City, GA). Additionally, we will be hosting a weather broadcaster to work with the NWS forecasters at the forecast desk. This week, our distinguished guest will be Danielle Vollmar of WCVB-TV (Boston, MA). If you see any of these folks walking around the building with a “NOAA Spring Experiment” visitor tag, please welcome them! The GOES-R program office, the NOAA Global Systems Division (GSD), and the National Severe Storms Laboratory have generously provided travel stipends for our participants from NWS forecast offices and television stations nationwide.

Visiting scientists this week will include Steve Albers (GSD), John Cintineo (Univ. of Wisconsin/CIMSS), Ashley Griffin (Univ. of Maryland), Chris Jewett (Univ. of Alabama – Huntsville), James McCormick (Air Force Weather Agency), Chris Schultz (Univ. of Alabama – Huntsville), and Bret Williams (Univ. of Alabama – Huntsville).

Darrel Kingfield will be the weekly coordinator. Lance VandenBoogart (WDTB) will be our “Tales from the Testbed” Webinar facilitator. Our support team also includes Kristin Calhoun, Gabe Garfield, Bill Line, Chris Karstens, Greg Stumpf, Karen Cooper, Vicki Farmer, Lans Rothfusz, Travis Smith, Aaron Anderson, and David Andra.

Here are several links of interest:

You can learn more about the EWP here:

https://hwt.nssl.noaa.gov/

NOAA employees can access the internal EWP2014 page with their LDAP credentials:

https://hwt.nssl.noaa.gov/ewp/internal/2014/

Gabe Garfield

CIMMS/NWS OUN

2014 EWP Operations Coordinator