In May 2008, we were given the opportunity to participate in NSSL’s Experimental Warning Program (EWP), which is part of the Hazardous Weather Testbed. The program was held at the National Weather Center in Norman, Oklahoma, for 6 weeks this year, running from April 28th to June 6th. This is the 2nd year of the EWP, which was born out of the Spring Program. The other component of the Hazardous Weather Testbed is the Experimental Forecast Program.

The purpose of the EWP is to evaluate new research and technology, and to bring researchers and developers into the same working environment as forecasters. The goals of this year’s program were threefold:

- Evaluate the Phased Array Radar (PAR), located in Norman.

- Evaluate the 3 cm CASA radars in central Oklahoma.

- Evaluate gridded probabilistic warnings.

Before delving into any of the above 3 evaluations, we were given some training as well as time to practice with the software. During the evaluations, there was always help available, as the learning curve was rather steep, especially for us Canadians who were unfamiliar with the Warning Decision Support System II (WDSSII) software. Above all, the researchers and developers wanted our feedback as we were being “run” through the various implementations. We gave feedback both on an ongoing basis and in a written survey after each evaluation. Below is a quick overview of each of the evaluations we participated in.

Phased Array Radar: The PAR is being considered as a possible replacement for the WSR-88D, which is now 20 years old. The array consists of 4352 transmit/receive elements, as opposed to a large rotating antenna with one feedhorn. The radar beam is vertically polarized, as opposed to the horizontal polarization of the WSR-88D, and the power is slightly reduced; as a result, features such as outflows and horizontal convective rolls are almost impossible to detect. Scans are available at one-minute updates, though, making storm evolution appear much more fluid. More information is available online at http://www.nssl.noaa.gov/projects/pardemo/ .

CASA Radar Network: There are four low-power CASA (Collaborative Adaptive Sensing of the Atmosphere) radars to the southwest of Norman, filling the “gap” between two WSR-88D radars, namely Frederick, OK and Norman. The CASA radars are 3 cm wavelength, so although they suffer greatly from attenuation, having four of them in close proximity negates this problem by way of a composite image. The big advantage of the CASA radars is their high resolution, both spatially and temporally, with an added bonus of being able to collect data from as low as 200 metres above the ground. Additional information is available at these websites: http://www.casa.umass.edu/research/springexperiment.html and http://www.casa.umass.edu/

Experimental Gridded Probabilistic Warnings: Currently, the decision to warn a particular storm is subjective, and takes place when a forecaster has a certain degree of confidence (decision threshold crossed) that severe weather is occurring, or is likely to occur. There is no avenue available to express how likely severe weather is, other than in the discussion.

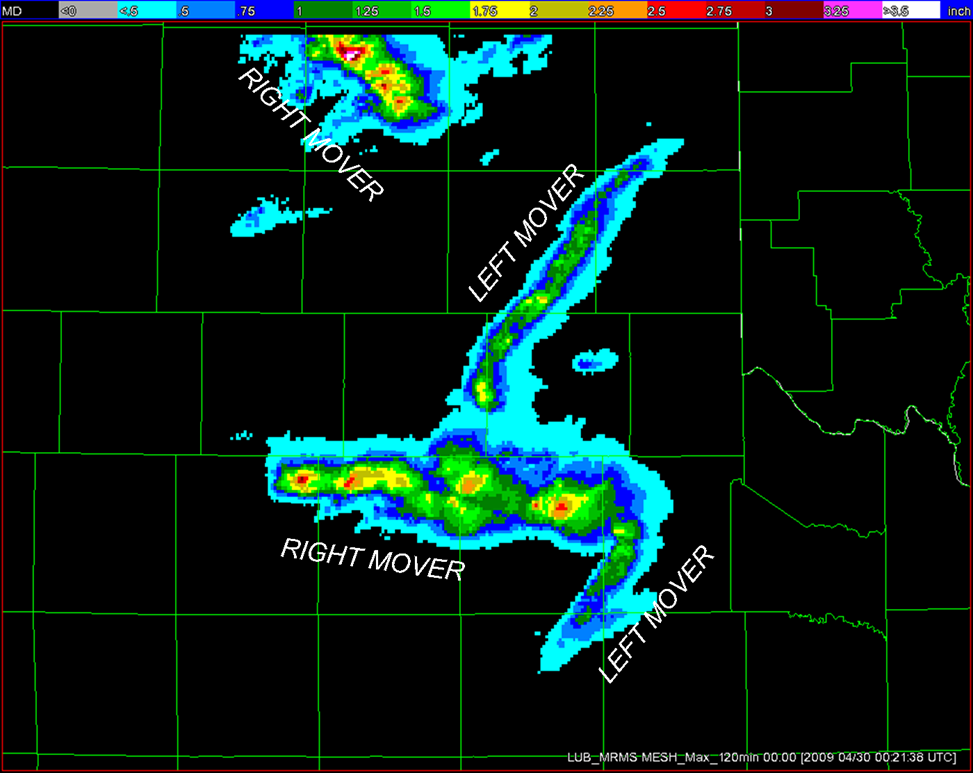

Recently the National Weather Service changed its warning areas from counties to polygons. You will notice while watching a radar animation that as a particular storm tracks along, warning polygons will “jump” every 30 minutes or so to take into account new storm positions and expected motions. While storms appear to track along fairly smoothly, warning polygons do not. As a result, lead times for an approaching storm will differ from one locality to another, based on the warning issue time and the shape of the warning polygon.
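The effect of “jumping” polygons on lead time can be illustrated with a minimal sketch. It assumes a storm moving in a straight line at constant speed, and a polygon that is reissued at a fixed interval and always extends a fixed distance ahead of the storm. The function name and all default values (60 km/h, 30 minutes, 45 km) are hypothetical choices for illustration, not NWS practice.

```python
def polygon_lead_time_min(distance_km, speed_kmh=60.0,
                          reissue_min=30.0, polygon_length_km=45.0):
    """Lead time (minutes) at a point `distance_km` downstream of the storm.

    Illustrative assumptions: straight-line storm motion at constant speed,
    and a polygon reissued every `reissue_min` minutes that covers
    `polygon_length_km` ahead of the storm position at issue time.
    """
    arrival_min = distance_km * 60.0 / speed_kmh
    k = 0
    while True:
        issue_min = k * reissue_min
        if issue_min > arrival_min:
            # The storm reached the point before any polygon covered it.
            return 0.0
        storm_pos_km = speed_kmh * issue_min / 60.0
        if distance_km <= storm_pos_km + polygon_length_km:
            # First polygon that includes the point: lead time starts here.
            return arrival_min - issue_min
        k += 1


if __name__ == "__main__":
    for d in (45, 46, 75, 76):
        print(d, "km downstream:", polygon_lead_time_min(d), "min lead time")
```

Under these assumptions, two localities only a kilometre apart can receive very different lead times, simply because one falls just inside a polygon and the other has to wait for the next issuance.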

Gridded probabilistic warnings (prob-warns) give forecasters the option to warn a storm before their mentally pre-defined threshold is crossed. Storms can be warned on as low as a 10% probability, all the way up to 100%. Storms are warned based on 3 categories: tornado, large hail, or damaging wind. In some supercells, there could be probability “cones” for all 3 simultaneously, each covering different areas depending on the specific threat.

Prob-warns are also given a velocity, thus they track along as smoothly as the storms being observed on a radar animation. Prob-warns can be assigned a changing threat level for storms that are expected to soon change in their intensity. Future versions will allow the warning “cones” to also depict curved probability paths.
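To picture how a threat area with an assigned velocity moves smoothly across a grid, here is a minimal sketch. It is not the WDSSII implementation: the Gaussian shape, the function names, and every default value (peak probability, radius, velocity, grid spacing) are assumptions chosen only for illustration.

```python
import math


def threat_probability(x_km, y_km, t_min, *, x0_km=0.0, y0_km=0.0,
                       u_kmh=40.0, v_kmh=0.0, peak=0.6, radius_km=10.0):
    """Probability at point (x, y), t minutes after the warning is issued.

    The threat centre starts at (x0, y0) and translates with the assigned
    velocity (u, v). Probability falls off with distance from the centre
    using a Gaussian shape (an illustrative assumption).
    """
    cx = x0_km + u_kmh * t_min / 60.0
    cy = y0_km + v_kmh * t_min / 60.0
    d2 = (x_km - cx) ** 2 + (y_km - cy) ** 2
    return peak * math.exp(-d2 / (2.0 * radius_km ** 2))


def probability_grid(t_min, nx=5, ny=3, spacing_km=10.0, **kwargs):
    """Sample the moving threat area on a small regular grid."""
    return [[threat_probability(i * spacing_km, j * spacing_km, t_min, **kwargs)
             for i in range(nx)]
            for j in range(ny)]


if __name__ == "__main__":
    for t in (0, 15, 30):
        row = probability_grid(t)[0]  # grid row along the storm track
        print(t, "min:", " ".join(f"{p:4.0%}" for p in row))
```

Because the probability is a function of time, every grid point sees the threat rise and fall continuously as the storm approaches and passes, rather than switching on when a new polygon is issued.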

The only drawback to prob-warns as they are currently set up (all experimentally, of course) is that they still don’t give you the option of tiered warnings. Even with prob-warns, we would still, to a certain degree, face the challenge of having more threats than bulletins (graphics) to express them. For example, a cold core tornado threat of 10% is considerably less threatening than an F5 tornado threat of 10%. Is it possible to design a “Tiered Gridded Probabilistic Warning System”?

Mark Melsness, Environment Canada, Winnipeg and Kevin Brown, NWS, Norman discussing a severe Nebraska storm during a live probabilistic warning exercise.

Overall Impressions: The National Weather Center is an impressive facility located at the University of Oklahoma in Norman. It was completed in 2006 as a collaboration between NOAA and the University of Oklahoma. It is home to research scientists, operational meteorologists, faculty, students, engineers, and technicians. It is also the home of the SPC, NSSL and the Norman NWS.

We worked mainly in the Hazardous Weather Testbed (HWT), physically located between the SPC and the Norman NWS. The program ran for six consecutive weeks, with 3 or 4 operational forecasters present each week, coming from such diverse localities as Winnipeg, Toronto, Serbia, Fairbanks, Seattle and Norman to name a few. It was our input as operational forecasters that the researchers and developers wanted to tap into. We filled out several surveys, participated in daily debriefings, and also gave ongoing input to the HWT staff, who were most accommodating and professional.

The sheer scope of the forecasting challenge is much different in the United States than in Canada, especially in the Prairie and Arctic region, where two to four severe weather meteorologists (two during the night and early morning hours) are responsible for issuing all warnings, watches, and special weather statements for half the land mass of Canada. These same two to four meteorologists monitor the nine radars which cover the populated portion of the Canadian Prairies, an area comparable in size, population density, and climate to that covered by 8 to 10 northern US NWS offices.

Having the ability to view minute-by-minute updates on both the CASA and Phased Array Radars was fascinating. Watching storms evolve in such a fluid motion was like the difference between watching a High Definition TV and an old black and white set. The main drawback for both radars was their inability to detect outflow boundaries and horizontal convective rolls. The amount of data to assimilate was huge, impossibly so when mentally translated into an Environment Canada office. The only way to incorporate this technology in a Canadian office would be to use an approach analogous to SCRIBE: quasi-automated warnings with the forecaster having the final say.

The scientists at the Hazardous Weather Testbed envision a time in the future, perhaps 10 to 15 years from now, when warnings evolve from our current “Warn on Detection” to “Warn on Forecast.” In other words, increased atmospheric monitoring coupled with an increased understanding of severe storms should allow us to issue warnings before a severe thunderstorm has even formed.

The Hazardous Weather Testbed is an excellent example of what could be accomplished here in Canada with a new National Laboratory dedicated to testing technology which could be used in Operations… with the goal of improving the forecasting and dissemination of severe weather.

We wish to thank our managers for allowing us to participate in this program, and the scientists at the National Weather Center for making our time there enjoyable and rewarding.

Bryan Tugwood (Environment Canada – Week 2 Participant)

Dave Patrick (Environment Canada – Week 4 Participant)

Mark Melsness (Environment Canada – Week 5 Participant)