Greetings from the off-season!

While SFE 2017 (!) is a ways off yet, preparations are already underway for many of the collaborators that provide products to the experiment. Development of the ensembles and guidance tested in the SFEs often occurs across a number of years, as tweaks suggested by prior experiments are implemented alongside new product development.

For example, in SFE 2015 four sets of tornado probabilities were evaluated. While all of the probabilities used 2-5 km updraft helicity (UH) from the NSSL-WRF ensemble, they differed in the environmental criteria used to filter the UH (i.e., if a simulated storm from a member was moving into an unfavorable environment, it was less likely to form a tornado and therefore the ensemble probabilities were lowered). These probabilities showed an overforecasting bias in the seasonally aggregated statistics, and the bias was consequential enough to be noted in subjective participant evaluations. The most typical rating for the probabilities was a 5 or 6 on a scale of 1-10, leaving much room for improvement.

To improve these tornado probabilities, a set of climatological tornado frequencies given a right-moving supercell and a significant tornado parameter (STP) value, as calculated by forecasters at the SPC, was brought to bear on the problem. Applying the climatological frequencies grounds the probabilities in reality. In the prior probabilities, if 6/10 ensemble members had a simulated storm passing over the same spot, the forecast probability would be 60%. The updated probabilities also consider the magnitude of the STP in the environment the storm is moving into in each member. For example, if all of the storms were moving into an environment with an STP of 2.0, each member with a storm is assigned the climatological frequency of a storm producing a tornado in that situation as its probability of a tornado. Then, the probabilities are averaged across all members, with members lacking a storm contributing zero. Assuming that 6/10 members now have the storm moving into an environment with STP = 2, the probability would be 60% × the climatological frequency of a tornado given STP = 2. Because that frequency is less than one, this approach lowers the probabilities and thus reduces overforecasting.
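To make the arithmetic concrete, here is a minimal Python sketch of the two calculations at a single grid point. It is only an illustration under stated assumptions, not the code that runs on the NSSL-WRF ensemble: the STP bins and frequencies in `STP_BIN_EDGES` and `CLIMO_FREQ` are made-up placeholders rather than the SPC's climatology, and the function names are hypothetical.

```python
from bisect import bisect_right

# ASSUMPTION: placeholder STP bins and tornado frequencies for illustration
# only. These are NOT the SPC's climatological values.
STP_BIN_EDGES = [0.0, 1.0, 2.0, 4.0]    # lower edge of each STP bin
CLIMO_FREQ = [0.05, 0.15, 0.30, 0.50]   # hypothetical frequency per bin


def climo_frequency(stp):
    """Return the placeholder tornado frequency for the bin containing stp."""
    if stp < STP_BIN_EDGES[0]:
        return 0.0
    idx = bisect_right(STP_BIN_EDGES, stp) - 1
    return CLIMO_FREQ[idx]


def uh_probability(storm_present):
    """Prior approach: fraction of members with a simulated storm at the point."""
    return sum(storm_present) / len(storm_present)


def stp_probability(storm_present, stp_values):
    """Updated approach: average the per-member climatological frequencies.

    A member with a storm contributes the frequency for its environmental
    STP; a member without a storm contributes zero.
    """
    total = 0.0
    for has_storm, stp in zip(storm_present, stp_values):
        if has_storm:
            total += climo_frequency(stp)
    return total / len(storm_present)


# The example from the text: 6 of 10 members have a storm, all with STP = 2.
storm_present = [True] * 6 + [False] * 4
stp_values = [2.0] * 10

print("UH-based probability: ", uh_probability(storm_present))
print("STP-based probability:", stp_probability(storm_present, stp_values))
```

With these placeholder numbers, the UH-based value is 60%, while the STP-based value is roughly 60% × 0.30 = 18%. In practice each member would carry its own STP value, so the per-member frequencies would differ before being averaged.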

The new set of probabilities will be tested in SFE 2017. However, these probabilities have been in development for over a year, and are already available daily on the NSSL-WRF ensemble’s website.

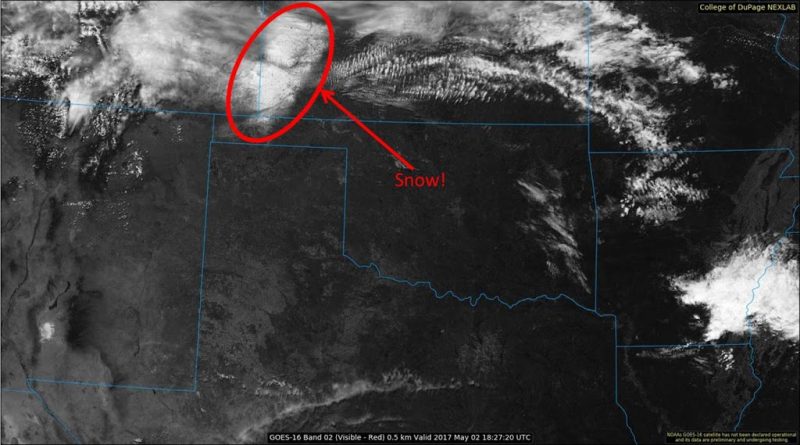

While the statistics for all of the tornado probabilities discussed herein were aggregated over the peak of tornado season (i.e., April-June), the end of November 2016 brought tornadoes to the southeastern United States, and with them, the chance to test the new probabilities. We’ll focus specifically on 29 November 2016, a day that saw 44 filtered tornado local storm reports (LSRs):

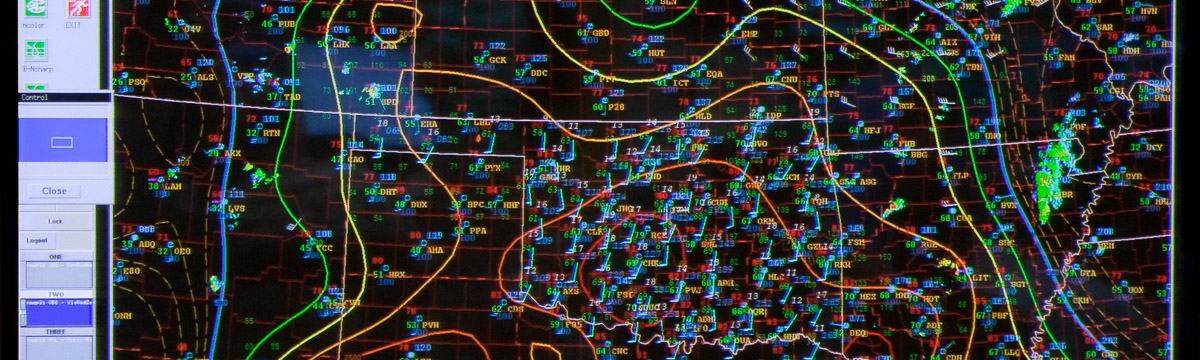

The Storm Prediction Center had a good handle on this scenario, showcasing the potential for severe weather across some of the affected region four days in advance. At 0600 UTC on the day of the event, their “enhanced” area covered much of the hardest-hit areas, with the axis of the outlook a bit skewed from the axis of the LSRs. The outlook and LSRs are shown below.

The 0600 UTC outlook is shown here because that is when the probabilities described above become available. Our hope is that someday forecasters can look at these probabilities as a “first guess” that combines multiple severe storm parameters from the ensemble into one graphic. The SPC’s probabilistic tornado forecast from 0600 UTC encompassed all of the tornado reports, but was a bit too far west initially. Ideally, the ensemble tornado forecasts would resemble the SPC’s forecast:

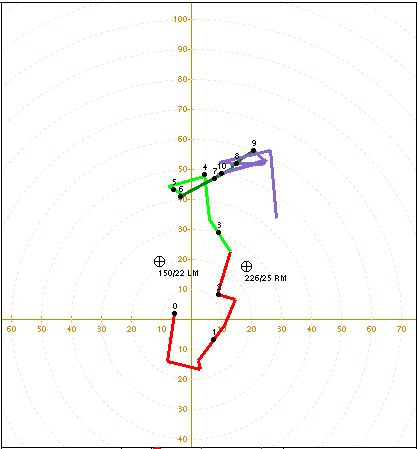

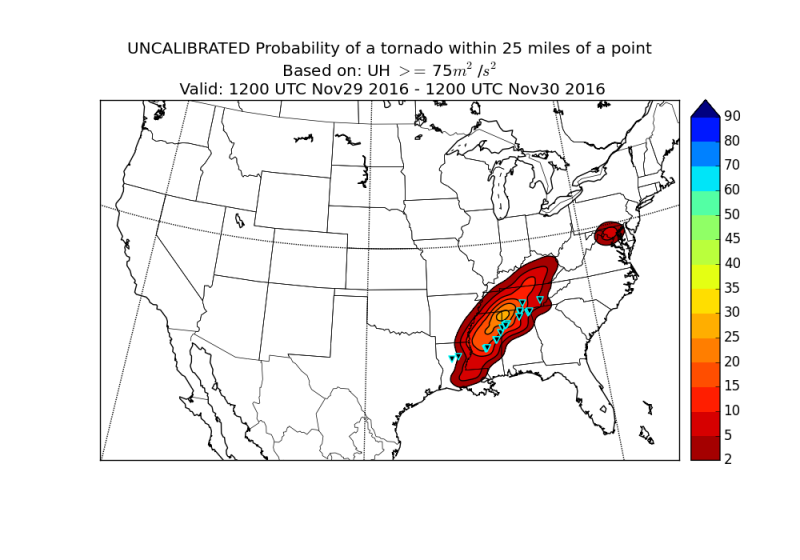

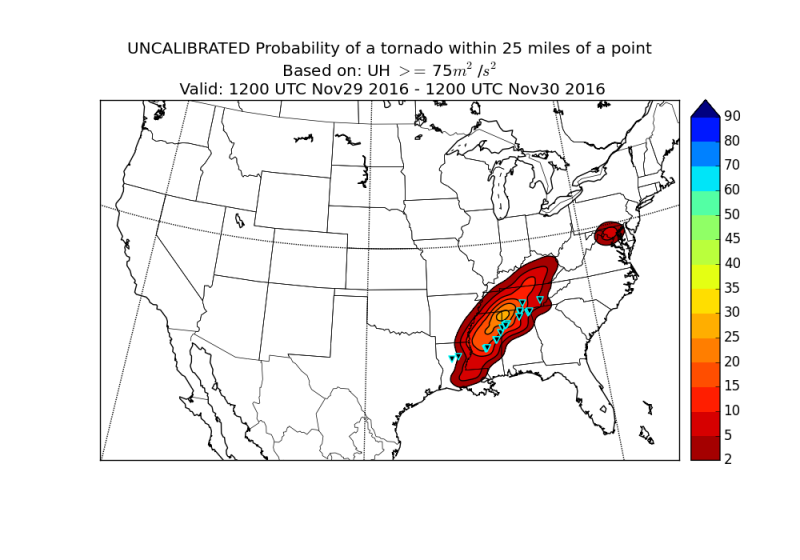

When we consider the UH-based probabilities, there’s a pocket of high probabilities, between 25% and 30%, in an area that is close to the highest density of tornado reports. However, not all of the reports are encompassed by the probabilities, and there is an extraneous blob of 5% risk over the DC/Maryland area. The 10% corridor of the probabilities extends farther north than the SPC’s, but overall, this was a decent forecast, if a bit high in that “bulls-eye” of probabilities.

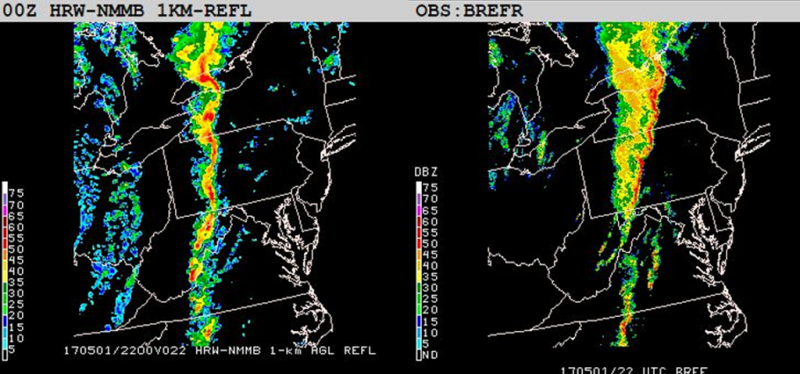

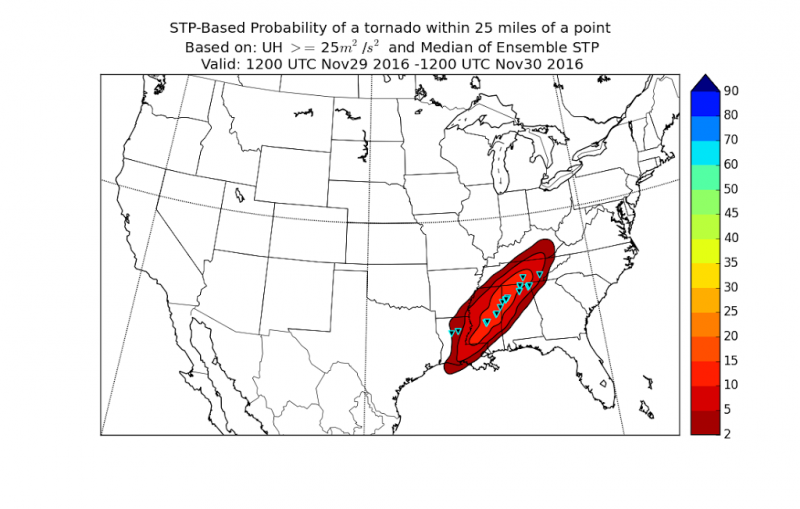

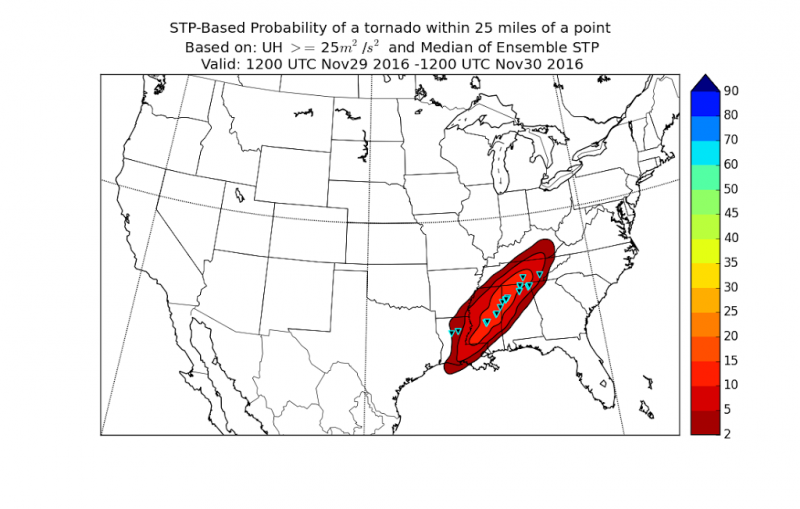

Let’s compare this to the STP-based probabilities:

These probabilities have a much lower magnitude, but still encompass most of the tornado reports within the 10% contour. The 2% contour is also extended westward into Louisiana, capturing the tornado report that the prior probabilities missed. Overall, this forecast is more like the SPC’s outlook, and better reflects what happened on the 29th.

Will we see the same trends into the spring? Aggregated seasonal statistics from spring 2014-2015 seem to suggest yes. However, the opportunity to get participant reflection and evaluation on these probabilities and this methodology awaits – and I, for one, am excited to see what new insights our participants will bring.