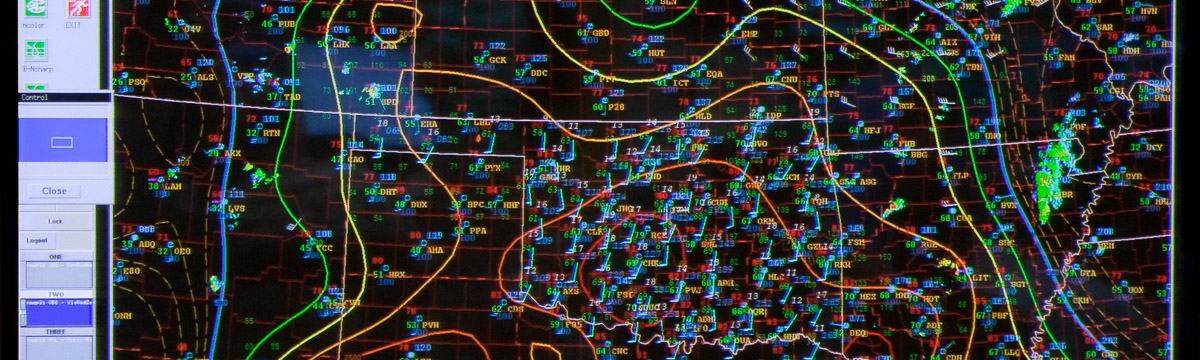

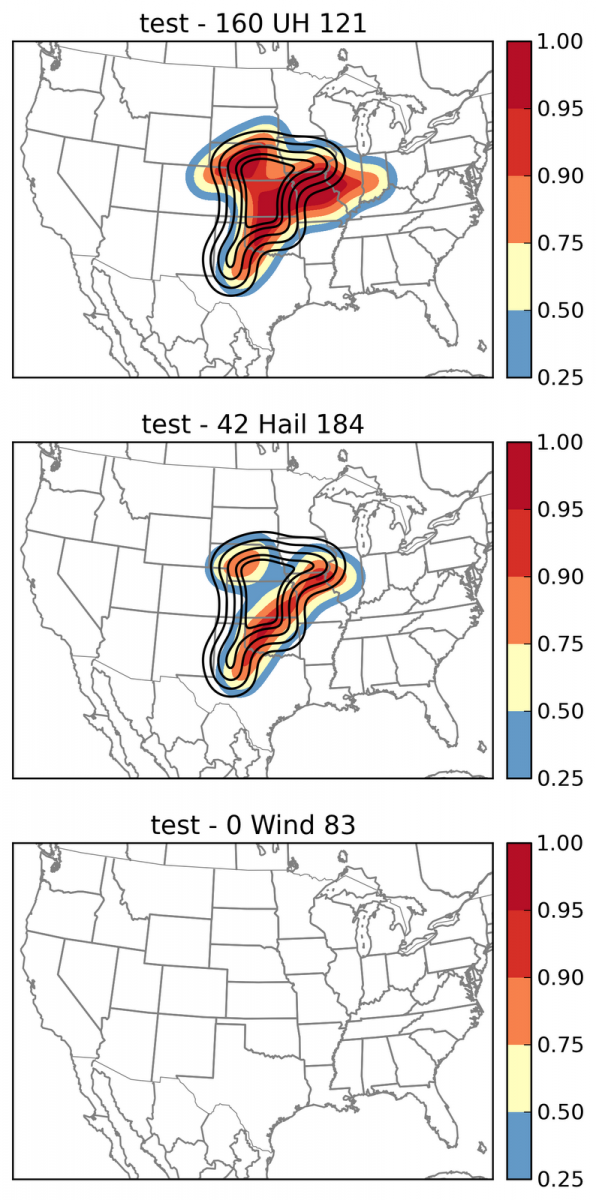

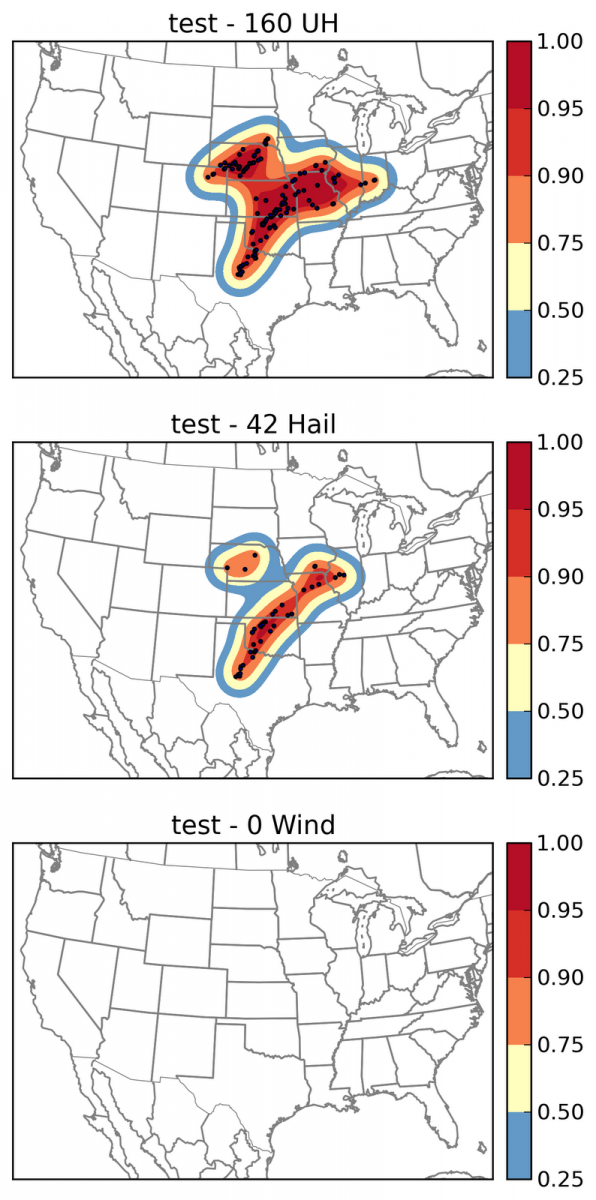

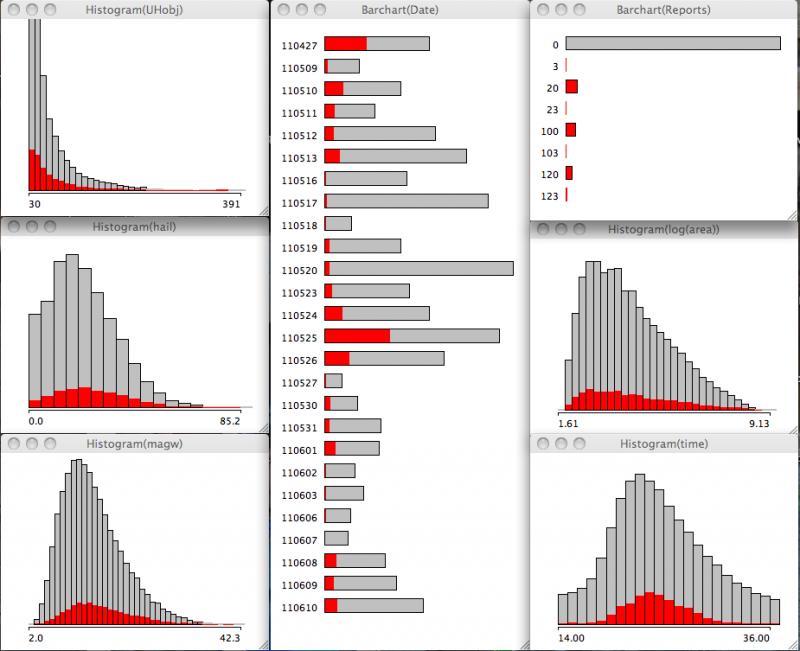

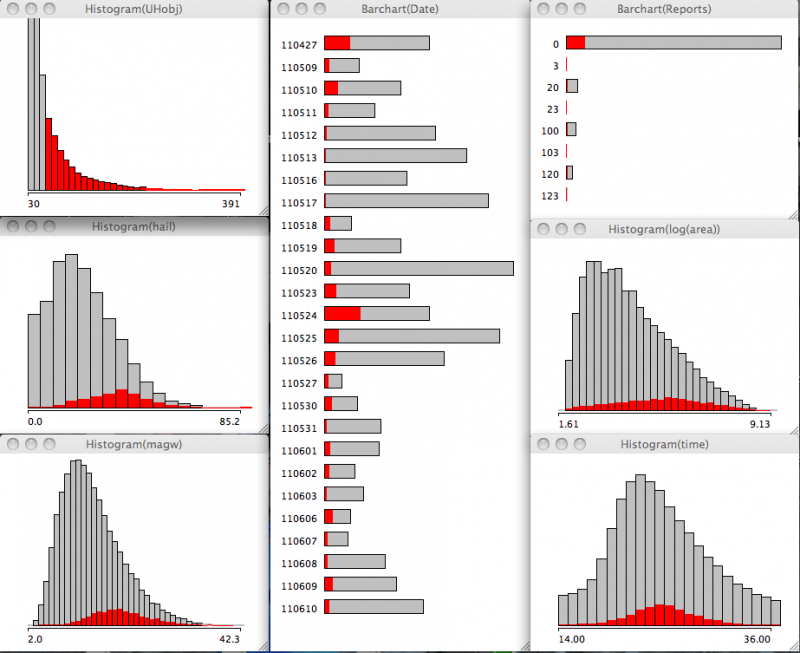

I am debugging and modifying code to complement some of our data assimilation (DA) evaluations. In recent years, efforts have been made to provide composite reflectivity at higher temporal resolution. I wanted to take a more statistical approach to visualizing these evaluations, and one way to do that is to data mine with object-based methods, in this case storm objects. I developed an algorithm that identifies storms in the composite reflectivity field with a double-area, double-threshold method, using the typical spread-growth approach. The 15-minute output interval is fine enough to show what happens early in the simulations, when one run has DA and the other does not; everything else is held constant.
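A minimal sketch of one common reading of a double-threshold, double-area method with spread-growth is shown below. It is not the algorithm described above. It assumes a 2-D composite reflectivity array in dBZ, 4-connectivity, and hypothetical thresholds (40 dBZ core, 30 dBZ edge) and minimum areas (4 and 10 pixels). The actual values and connectivity are not stated here. The sketch works in three steps. First, cores above the high threshold that pass the first area test become seeds. Second, the seeds spread outward together, breadth-first, into adjacent pixels above the low threshold. Third, grown objects that fail the second area test are discarded.

```python
from collections import deque

import numpy as np

# 4-connected neighbor offsets (assumption; 8-connectivity is also common)
NEIGHBORS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def label_components(mask):
    """Label 4-connected True regions of a 2-D boolean mask (0 = background)."""
    labels = np.zeros(mask.shape, dtype=int)
    current = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        current += 1
        labels[start] = current
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            for dr, dc in NEIGHBORS:
                nr, nc = r + dr, c + dc
                if (0 <= nr < mask.shape[0] and 0 <= nc < mask.shape[1]
                        and mask[nr, nc] and not labels[nr, nc]):
                    labels[nr, nc] = current
                    queue.append((nr, nc))
    return labels, current


def identify_storms(refl, core_dbz=40.0, core_min_px=4,
                    edge_dbz=30.0, edge_min_px=10):
    """Hypothetical double-threshold, double-area storm identification.

    All threshold and area defaults are illustrative assumptions.
    Returns (label array, number of storms); 0 marks background.
    """
    # 1) First threshold + first area test: keep sufficiently large cores.
    core_labels, n_cores = label_components(refl >= core_dbz)
    storms = np.zeros(refl.shape, dtype=int)
    queue = deque()
    next_id = 0
    for k in range(1, n_cores + 1):
        pix = np.argwhere(core_labels == k)
        if len(pix) < core_min_px:
            continue
        next_id += 1
        for r, c in pix:
            storms[r, c] = next_id
            queue.append((r, c))

    # 2) Spread-growth: all surviving cores grow together (breadth-first)
    #    into neighbors above the lower threshold. A pixel goes to whichever
    #    seed reaches it first, so touching storms are split, not merged.
    grow = refl >= edge_dbz
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < refl.shape[0] and 0 <= nc < refl.shape[1]
                    and grow[nr, nc] and storms[nr, nc] == 0):
                storms[nr, nc] = storms[r, c]
                queue.append((nr, nc))

    # 3) Second area test: drop grown objects that are still too small,
    #    then renumber the survivors consecutively.
    final = np.zeros_like(storms)
    n_final = 0
    for k in range(1, next_id + 1):
        obj = storms == k
        if obj.sum() >= edge_min_px:
            n_final += 1
            final[obj] = n_final
    return final, n_final
```

The per-object pixel count used later is then `(labels == k).sum()` for each storm `k`. Splitting touching objects between competing seeds, rather than merging them, is the main reason to grow from cores instead of simply thresholding at the lower value.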

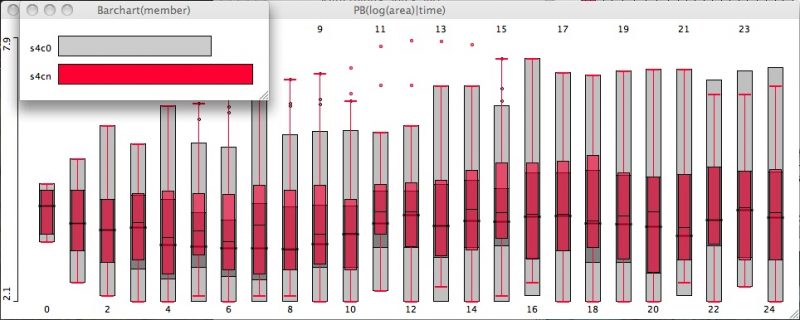

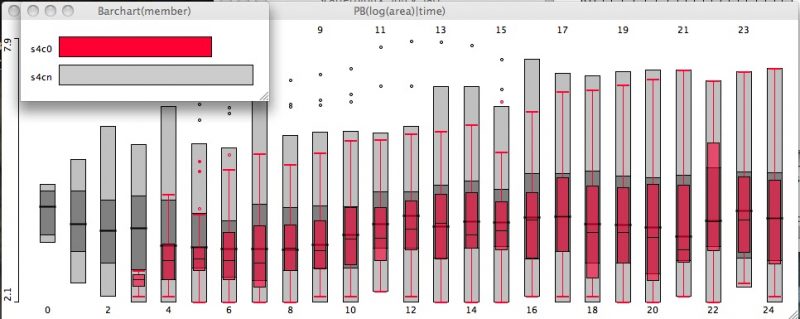

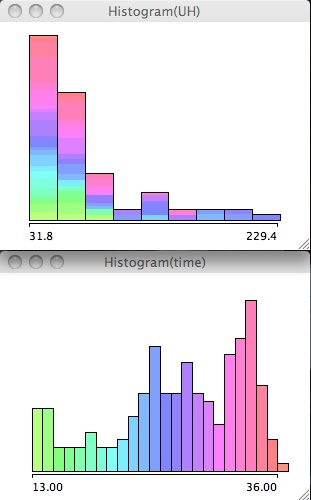

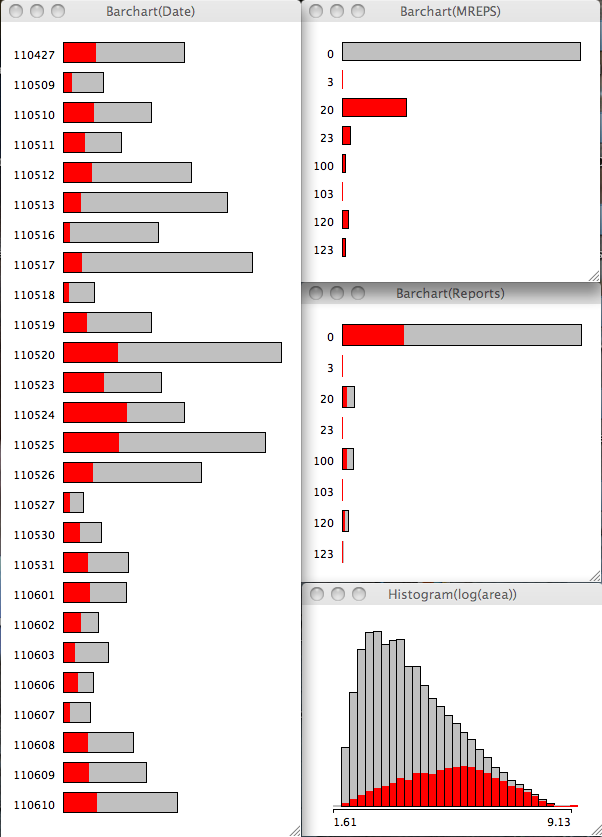

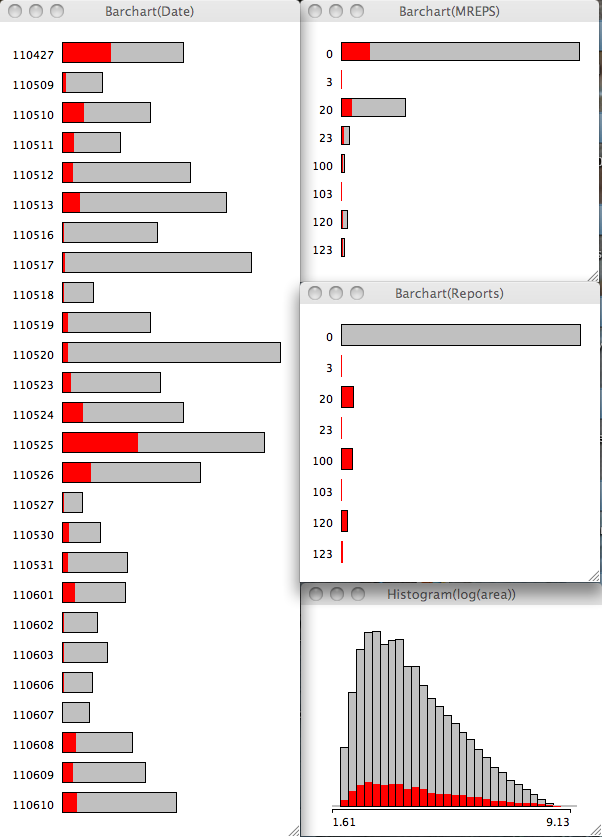

Among the variables extracted for each object at every 15-minute output time are maximum composite reflectivity, maximum 1-km reflectivity, and pixel count. To make the upcoming plot more readable, I have taken the natural log of the pixel count, so a value of about 4 corresponds to roughly 55 pixels (e^4 ≈ 54.6). The plot is a conditional box plot of ln(pixel count) by model output step, where each step is 15 minutes, 0 is 0000 UTC, and 24 is 0600 UTC. I have used a technique called linked highlighting to overlay the run with data assimilation (top). Note that the run without DA does not initiate storms until 45 minutes into the simulation (bottom). The takeaway is that, for this one case, the storms assimilated at the start time (over much of the model domain) have a median ln(pixel count) of 4.2 (about 67 pixels). In contrast, the storms the run without DA initiates sit at the low end, with a median of 2.6 (about 13 pixels).
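The log transform and per-time grouping behind the conditional box plot can be sketched as follows. The sketch assumes each storm object has already been reduced to an (output step, pixel count) pair. The function names and the `STEP_MINUTES` constant are illustrative, not taken from the original code. Because ln is monotonic, exponentiating the median ln(pixel count) recovers the median pixel count exactly when a step has an odd number of objects. With an even count, it gives the geometric mean of the two middle values. This is how medians of 4.2 and 2.6 translate back to roughly 67 and 13 pixels.

```python
import math
from collections import defaultdict
from statistics import median

STEP_MINUTES = 15  # output interval described in the text


def step_to_utc(step, start_hour=0):
    """Convert an output step (0 = start_hour UTC) to an HHMM UTC label."""
    total = start_hour * 60 + step * STEP_MINUTES
    return f"{(total // 60) % 24:02d}{total % 60:02d} UTC"


def ln_size_by_step(objects):
    """Group ln(pixel count) of each storm object by output step.

    objects: iterable of (step, pixel_count) pairs, one per storm object.
    Returns a dict ordered by step.
    """
    groups = defaultdict(list)
    for step, npix in objects:
        groups[step].append(math.log(npix))
    return dict(sorted(groups.items()))


def median_pixels(groups):
    """Per step: (median ln(pixel count), exp of that median in pixels)."""
    result = {}
    for step, values in groups.items():
        m = median(values)
        result[step] = (m, math.exp(m))
    return result
```

The per-step lists returned by `ln_size_by_step` can be passed, in step order, to matplotlib's `Axes.boxplot` to draw one box per output time, with `step_to_utc` supplying the tick labels. Drawing the DA and no-DA runs on matching axes mirrors the top/bottom comparison.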

This is one aspect we will be able to explore next week. Once things are working well, we can analyze skill scores from this object-based approach.