Going into HWT today, I was hoping for a straightforward (i.e., easy) storm forecast. I was hoping for one clean-slate area: an area where previous storms would not be an issue, where storms would take their time forming, and where the storms that did form would be at least partially predicted by the suite of model guidance we have at our disposal. That is the last time I think that.

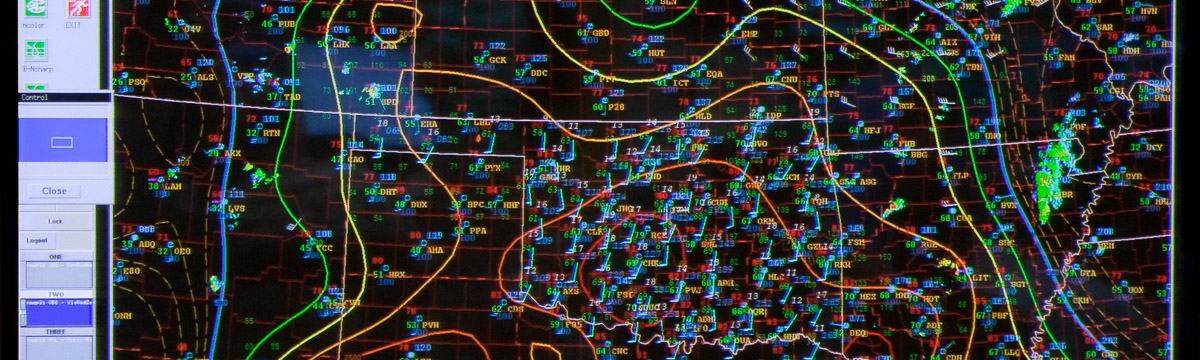

The issues for today were not particularly difficult, just complex. The ensemble that we work with was doing its job, but the relatively weak forcing for ascent in an unstable environment was leading to convection initiation early and often. The resulting convection produced outflow boundaries that triggered more convection. This area of convection stretched across NM, CO, KS, and NE. All of this convection made it difficult to rely on the model forecasts when making subsequent forecasts of what might occur this evening in NE/SD/IA along the presumed location of a warm front.

We ended up trying to sum up not only the persistent signals from the ensemble, but also every single deterministic model we could get our hands on. We used the 12 UTC NAM, 15 UTC SREF, RUC, HRRR, NASA WRF, NSSL WRF, NCAR WRF, and others. We found significant differences between observations and every one of these forecast models (not exactly a rare occurrence), which justified putting little weight on the details and attempting to figure out, via pattern recognition, what could happen. We were not very confident in the end, knowing that no matter what we forecast or when, we were destined to bust.

The ensemble members did their job in providing spread, but it was still somehow not enough. Perhaps it was not the right kind or the right amount of spread. We will find out tomorrow how well (or poorly) we did on this quite challenging forecast. In the end, though, we had so much data to digest and process that the information we were trying to extract became muddied. Without clear signals from the ensemble, how does a forecaster extract the information and turn it into a scenario? Furthermore, how can the forecaster compare that scenario against the current observations to assess whether it is plausible?

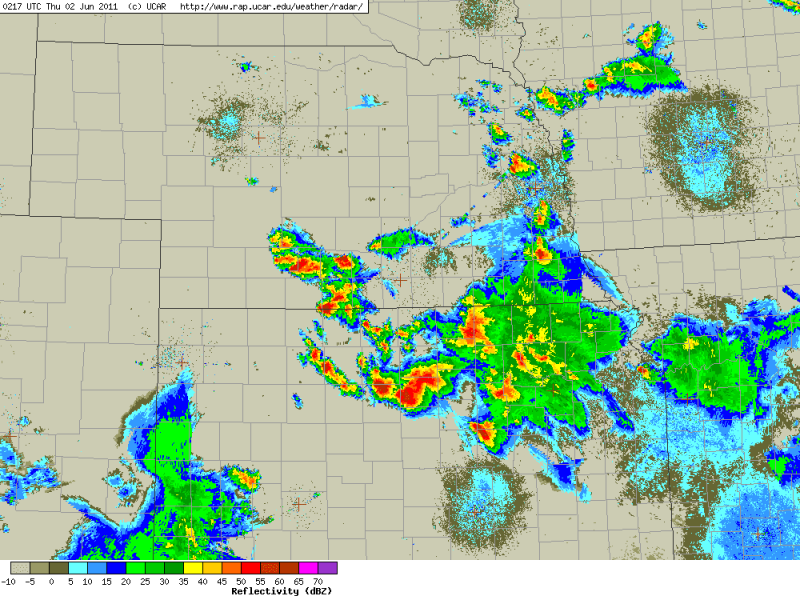

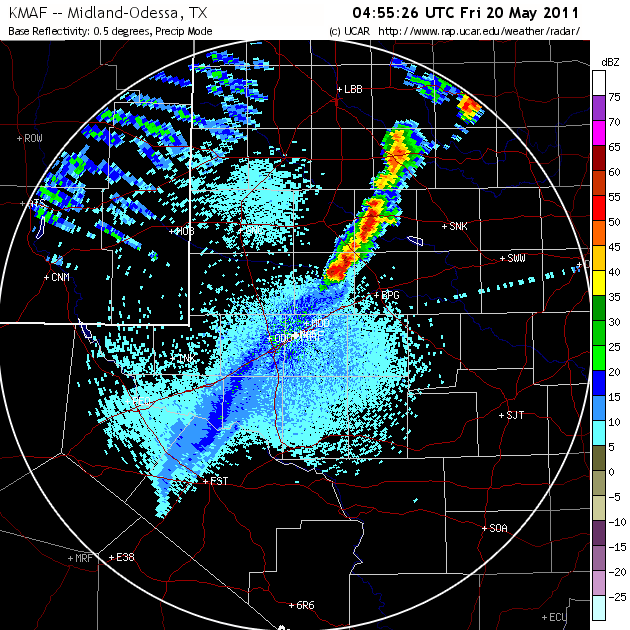

I will leave you with the current radar and ask quite simply: What will the radar look like in 3 hours?

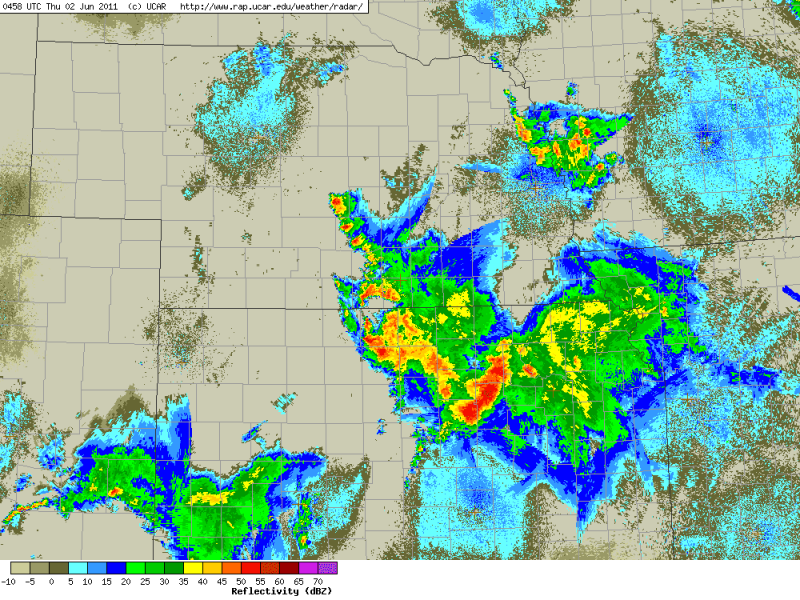

UPDATE: Here is what the radar looked like 3 hours later:

It looked nothing like our forecast for new storms. But that is the challenge when you are making forecasts like these.