The fine-resolution guidance we are analyzing can get the forecast wrong yet still verify probabilistically. It may seem strange, but the models do not have to be perfect; they just have to be smooth enough (tuned, bias corrected) to be reliable. The smoothing is done on purpose because the discretized equations cannot resolve features smaller than about 5-7 times the grid spacing. It is also done because the models have little skill at scales below 10-14 times the grid spacing. As it has been explained to me, this is approximately the scale at which the forecasts become statistically reliable. In the reliable sense, a 10 percent probability forecast verifies 10 percent of the time: of all the times that probability is issued, the event occurs in about one case out of ten.
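To make the reliability idea concrete, here is a minimal Python sketch of how a reliability table is usually built: forecasts are grouped into probability bins, and the mean forecast probability in each bin is compared with how often the event actually occurred. The function name `reliability_table`, the 10 percent bin width, and the toy data are illustrative assumptions, not the verification code used in the HWT.

```python
from collections import defaultdict


def reliability_table(probs, outcomes, bin_width=0.1):
    """Group forecasts into probability bins and return, for each non-empty bin,
    (mean forecast probability, observed event frequency, number of forecasts)."""
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must have the same length")
    n_bins = round(1 / bin_width)
    counts = defaultdict(int)
    prob_sums = defaultdict(float)
    hits = defaultdict(int)
    for p, o in zip(probs, outcomes):
        # Clamp p == 1.0 into the top bin instead of creating an extra bin.
        b = min(int(p / bin_width), n_bins - 1)
        counts[b] += 1
        prob_sums[b] += p
        hits[b] += int(o)
    return [
        (prob_sums[b] / counts[b], hits[b] / counts[b], counts[b])
        for b in sorted(counts)
    ]


# Hypothetical forecast set: twenty 10 percent forecasts (event occurred twice)
# and ten 50 percent forecasts (event occurred five times).
probs = [0.1] * 20 + [0.5] * 10
outcomes = [1] * 2 + [0] * 18 + [1] * 5 + [0] * 5
for mean_p, obs_freq, n in reliability_table(probs, outcomes):
    print(f"forecast {mean_p:.2f}  observed {obs_freq:.2f}  n={n}")
```

In this toy set each bin's observed frequency matches its forecast probability, which is exactly what "reliable" means; a real reliability diagram plots these pairs against the diagonal.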

This makes competing with the model tough unless we have the skill not only to derive similar probabilities, but also to place those probabilities close in space and time to what is observed. In other words: draw the radar at forecast hour X probabilistically. If you spread those probabilities over a large area, you won't necessarily verify. But if you know the number of storms, their intensity, and their longevity, and you place them close to what was observed, you can verify as well as the models. This means humans can be just as wrong in the details and still verify their forecasts well.
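A toy example can show why placement matters. The sketch below scores three hypothetical forecasts on a one-dimensional strip of grid cells using the Brier score (the mean squared difference between forecast probability and the 0/1 outcome), chosen here only as a simple stand-in for whatever verification metric is actually used. The grid, storm locations, and probability values are all made up for illustration.

```python
def brier_score(probs, obs):
    """Mean squared difference between forecast probability and 0/1 outcome."""
    if len(probs) != len(obs):
        raise ValueError("probs and obs must have the same length")
    return sum((p - o) ** 2 for p, o in zip(probs, obs)) / len(probs)


N_CELLS = 20
# Hypothetical 1-D strip of grid cells: storms observed in cells 8 and 9.
observed = [1 if i in (8, 9) else 0 for i in range(N_CELLS)]

# Broad forecast: 10 percent everywhere (equal to the observed base rate here).
broad = [0.1] * N_CELLS
# Well-placed forecast: 50 percent on the four cells around the storms.
placed = [0.5 if 7 <= i <= 10 else 0.0 for i in range(N_CELLS)]
# Same shape and sharpness as "placed", but displaced away from the storms.
displaced = [0.5 if 14 <= i <= 17 else 0.0 for i in range(N_CELLS)]

for name, fcst in [("broad", broad), ("placed", placed), ("displaced", displaced)]:
    print(f"{name:>9}: Brier = {brier_score(fcst, observed):.3f}")
```

With these numbers the well-placed forecast scores about 0.05, the broad forecast about 0.09, and the displaced forecast about 0.15 (lower is better). A sharp forecast placed near the storms beats a broad one, but the same sharp forecast in the wrong place does worse than the broad one.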

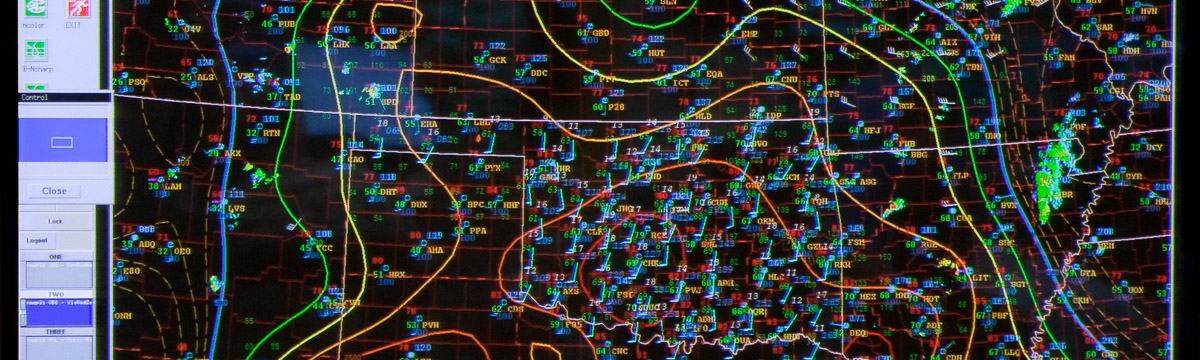

Let us think through drawing the radar. This is exactly what we are trying to do, in a limited sense, in the HWT for the Convection Initiation (CI) and Severe Storms Desks over 3-hour periods. The trick is the 3-hour period, over which both the models and the forecasters can effectively smooth their forecasts. We isolate the areas of interest and try to use the best forecast guidance to come up with a mental model of what is possible and probable. We try to add detail to those areas by increasing the probabilities in some places and removing them in others. But we still feel we are ignoring certain details. In CI, we feel we should be trying to capture episodes. An episode is when CI occurs in close proximity to other CI within a certain time frame, presumably because of a similar physical mechanism.
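One common way to smooth a field in space and time is a neighborhood approach: first ask whether CI occurred at each grid point at any hour in the window, then turn that yes/no field into the fraction of nearby points where it occurred. The sketch below assumes hourly binary CI grids, a 3-hour window, and a square neighborhood; the function names, grid size, and radius are hypothetical, and this is not necessarily how the HWT desks construct their probabilities.

```python
def window_max(hourly_grids, start, length=3):
    """Return a grid that is 1 where CI occurred at any hour in
    [start, start + length), else 0. Grids are lists of rows (grid[row][col])."""
    window = hourly_grids[start:start + length]
    ny, nx = len(window[0]), len(window[0][0])
    return [[max(g[j][i] for g in window) for i in range(nx)] for j in range(ny)]


def neighborhood_fraction(grid, radius):
    """Fraction of cells equal to 1 within a (2*radius+1) x (2*radius+1) square
    centered on each point. Near the edges only in-domain cells are counted."""
    ny, nx = len(grid), len(grid[0])
    out = [[0.0] * nx for _ in range(ny)]
    for j in range(ny):
        for i in range(nx):
            total = count = 0
            for jj in range(max(0, j - radius), min(ny, j + radius + 1)):
                for ii in range(max(0, i - radius), min(nx, i + radius + 1)):
                    total += grid[jj][ii]
                    count += 1
            out[j][i] = total / count
    return out


def empty_grid(n=5):
    return [[0] * n for _ in range(n)]


# Hypothetical 3-hour window on a 5x5 grid:
# CI at row 2, col 2 in hour 0 and at row 2, col 3 in hour 2.
hours = [empty_grid(), empty_grid(), empty_grid()]
hours[0][2][2] = 1
hours[2][2][3] = 1

ci_any = window_max(hours, start=0, length=3)
prob = neighborhood_fraction(ci_any, radius=1)
for row in prob:
    print(" ".join(f"{p:.2f}" for p in row))
```

Truncating the neighborhood at the domain edge is one of several reasonable edge choices; padding with zeros would lower the edge values instead.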

By doing this, we are essentially trying to provide not only context and perspective but also a sense of understanding and anticipation. If we know the mechanism, we can look in the observations for that mechanism, or for its symptoms, in the hope of anticipating CI. We also hope to be able to identify failure modes.

In speaking with forecasters over the last few weeks, there is a general feeling that it is very difficult to know when to accept and when to reject the model guidance. The models don't have to be perfect in individual fields (correct values or low RMS error); they just need to be relatively correct (errors can cancel). How can we realistically predict model success or model failure? Can we predict when forecasters will get this assessment wrong?
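As a simple illustration of errors canceling, consider a hypothetical case where the forecast temperature and dewpoint are both 2 °C too warm. Each field has an RMS error of 2 °C, yet the dewpoint depression (temperature minus dewpoint), which relates to low-level moisture and cloud-base height, is forecast exactly. The station values below are invented for the example.

```python
from math import sqrt


def rmse(fcst, obs):
    """Root-mean-square error between paired forecast and observed values."""
    return sqrt(sum((f - o) ** 2 for f, o in zip(fcst, obs)) / len(obs))


# Hypothetical observations at three stations (deg C).
t_obs = [30.0, 28.0, 25.0]
td_obs = [20.0, 19.0, 18.0]

# Hypothetical forecasts: both fields uniformly 2 C too warm.
t_fcst = [t + 2.0 for t in t_obs]
td_fcst = [td + 2.0 for td in td_obs]

# Dewpoint depression, a derived quantity in which the shared bias cancels.
dep_obs = [t - td for t, td in zip(t_obs, td_obs)]
dep_fcst = [t - td for t, td in zip(t_fcst, td_fcst)]

print("RMSE T:     ", rmse(t_fcst, t_obs))
print("RMSE Td:    ", rmse(td_fcst, td_obs))
print("RMSE T - Td:", rmse(dep_fcst, dep_obs))
```

This prints an RMSE of 2.0 for temperature and for dewpoint, but 0.0 for the dewpoint depression: both individual fields are wrong, while the relationship between them is right.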