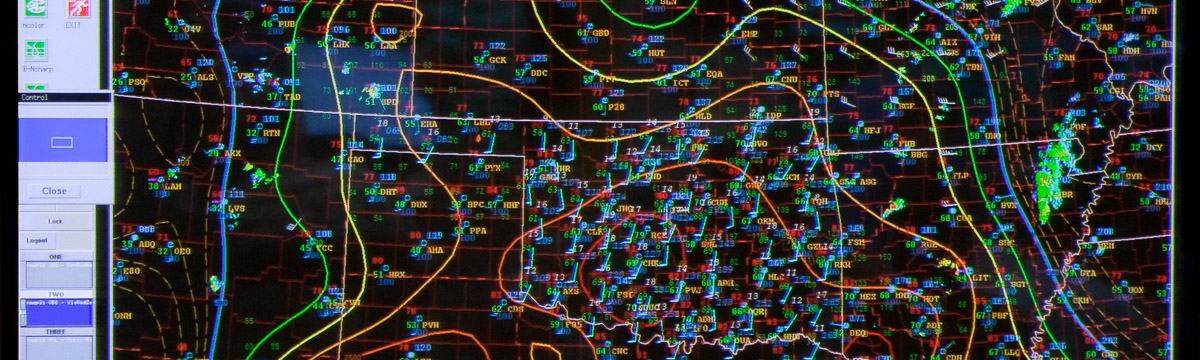

As jimmyc touched on in his last post, one of the struggles facing the Hazardous Weather Testbed is how to visualize the incredibly large datasets being generated. With well over 60 model runs available to HWT Experimental Forecast Program participants, the ability to synthesize large volumes of data very quickly is a must. Historically we have used a meteorological visualization package known as NAWIPS, the same software the Storm Prediction Center uses in its operations. Unfortunately, NAWIPS was not designed to handle datasets as large as those now being generated.

To help mitigate this, we relied on the web as much as possible. One webpage I put together is a highly interactive convection initiation (CI) forecast and observations page. It allowed users to create 3-, 4-, 6-, or 9-panel plots showing CI probabilities from any of the 28 ensemble members, the NSSL-WRF, or the observations. Users could also overlay the raw CI points from any of the ensemble members, the NSSL-WRF, or the observations to see how those points contributed to the underlying probabilities. We even made it possible to overlay the human forecasts to see how they compared with any of the numerical guidance or the observations. The webpage turned out to be a huge hit with visitors, not only because it allowed quick visualization of a large amount of data, but also because it let visitors interrogate the ensemble from anywhere, not just in the HWT.

The website also let us evaluate the performance of individual ensemble members, as well as how varying the PBL schemes affected the CI probabilities. Again, it proved a great way to sift through a large amount of data in a relatively short amount of time.