The Warn-on-Forecast System is a research project at the NOAA National Severe Storms Laboratory that aims to increase lead time for tornado, severe thunderstorm and flash flood warnings. Since its inception in 2009, researchers have been hard at work developing and improving it. The Warn-on-Forecast System is not just one application, but many applications and components that all work together to give users the final model output. The usefulness and success of Warn-on-Forecast (WoF) have been well documented already, but for a quick overview, check out the Case Studies page to see how WoF has provided advance notice of extreme weather events, including tornadoes, large hail, straight-line winds and flash flooding. Every year since 2017, Warn-on-Forecast has been tested in real time during multiple spring and summer high-impact weather events, providing valuable data to local NOAA National Weather Service forecast offices and researchers at NSSL and the Cooperative Institute for Severe and High-Impact Weather Research and Operations (CIWRO).

The Warn-on-Forecast System and its workflow, which includes the Numerical Weather Prediction (NWP) model, an Ensemble Kalman Filter (EnKF) for data assimilation and all associated applications, post-processing applications, web server and web application, were all developed on NSSL’s local High Performance Computing (HPC) system. The success and stability the local infrastructure provided were paramount to the early development and testing of Warn-on-Forecast. With the introduction and widespread availability of cloud-based services, researchers began to investigate the feasibility of running WoF in the cloud, and in the summer of 2020, development began on a new, cloud-based Warn-on-Forecast System. That work was completed early this year and WoF is now operating in the cloud.

The first step in migrating Warn-on-Forecast to the cloud was to streamline some of the development processes by developing a Continuous Integration, Continuous Deployment (CI/CD) pipeline. The time invested in developing a proper CI/CD pipeline early on in an application’s development is well worth the effort, because it saves the time otherwise spent repeatedly rebuilding, testing and managing releases. It is essential and will set the foundation from which projects will grow. Azure DevOps was selected for its existing integration with Azure Active Directory, seamless integration with GitHub and many built-in project planning features. With Azure DevOps in place, we were able to develop automatic pipelines for building and deploying every individual piece of the system: the numerical weather model, post-processing, web application, etc. Code changes made to any one piece of the system now automatically get built, versioned, stored and deployed. This enables NSSL to quickly test changes and keep close track of which features are included with each model run, down to the individual changeset on GitHub!

With a CI/CD workflow in place, the next step was to begin building the entire infrastructure on the cloud. This required us to examine our existing workflow and identify what could be used directly in the cloud and what needed to be updated to take full advantage of what the cloud has to offer. One of the requirements of this project is to (eventually) pass this entire application over to forecast operations, so having a way to rebuild this project on another cloud environment is essential. To meet these requirements, Terraform was used to describe the entire Warn-on-Forecast infrastructure (including the CI/CD). Terraform is a very powerful tool that allows the user to express infrastructure as code. With the entire cloud-based WoFS (Cb-WoFS) infrastructure expressed as human-readable code, NSSL can have its infrastructure code managed and source-controlled in GitHub just like the WoFS application code, providing a single location for both the infrastructure and application. This is what will give NSSL the ability to quickly re-create the entire Cb-WoFS infrastructure, whether it’s a new, internal environment or a completely new cloud subscription. Terraform is also cloud agnostic, providing plugins for all the major cloud service providers.

With our CI/CD pipelines in place, along with Terraform to describe which cloud services to create and how those services must interconnect, we then had to containerize WoFS and all its dependencies. WoFS, which includes customized versions of WRF, GSI, EnKF and other applications, had been compiled and executed on NSSL’s internal HPC Cray environment. To make WoFS more portable, these applications were updated and containerized into a single Singularity container that could be executed on almost any HPC environment, including the cloud! With a newly containerized WoFS, NSSL can “drop” the containerized version of WoFS onto any number of nodes and execute as before, without the need to install dependencies or recompile anything.
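To make the idea of "dropping" a container onto compute nodes more concrete, here is a minimal Python sketch of how a launch command for a containerized executable might be assembled. It is not the actual WoFS launcher: the image name `wofs.sif`, the executable `wrf.exe`, the bind directory and the use of `mpirun` are all assumptions for illustration. Only `singularity exec` and its `--bind` option are standard Singularity usage.

```python
import shlex


def build_launch_command(image, executable, n_tasks, bind_dirs=()):
    """Assemble an MPI launch line that runs `executable` inside a container.

    All names passed in are hypothetical; the host MPI launcher starts one
    containerized process per task.
    """
    cmd = ["mpirun", "-n", str(n_tasks), "singularity", "exec"]
    for directory in bind_dirs:
        # Make a host directory (e.g. run scratch space) visible in the container.
        cmd += ["--bind", directory]
    cmd += [image, executable]
    return cmd


# Hypothetical image, executable, task count and scratch path.
cmd = build_launch_command("wofs.sif", "wrf.exe", 96, bind_dirs=["/scratch/run01"])
print(shlex.join(cmd))
```

Because the dependencies live inside the image, the same command line would apply on any host that has Singularity and a compatible MPI launcher installed, which is the portability the containerized approach is after.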

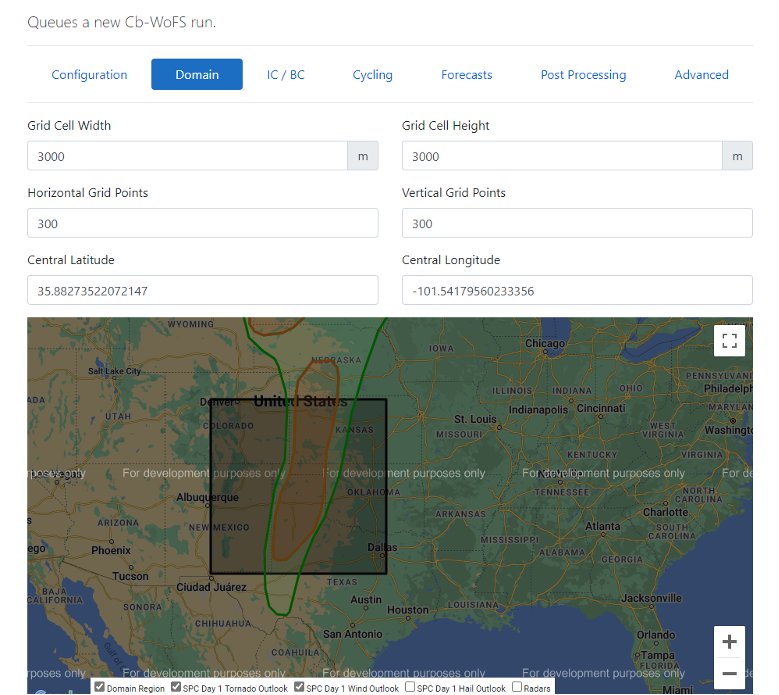

Orchestration was the next step in the migration process. The goal was to make creating a WoFS run as simple as possible so anyone could start it. A new, database-driven web application was built for the cloud that lets administrators configure and launch a new NWP run, and also view post-processed results using the same web viewer previously developed for the on-premises version of WoFS. The web application utilizes a newly created object framework that describes the WoFS workflow, including the tasks required to run cycling, forecasting and post-processing. An example of the user interface developed for queuing a new model run can be seen below.
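As for the object framework behind that interface, the following is a minimal Python sketch of how a workflow of tasks could be described as objects. The class names `Task` and `Workflow`, the domain label, and the simple chain of dependencies (cycling, then forecasting, then post-processing) are assumptions made for illustration; the real framework is not shown in this post and is likely richer.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class Task:
    """One step of a model run; `depends_on` names tasks that must finish first."""
    name: str
    depends_on: tuple[str, ...] = ()


@dataclass
class Workflow:
    """A collection of tasks for one (hypothetical) domain."""
    domain: str
    tasks: dict[str, Task] = field(default_factory=dict)

    def add(self, task: Task) -> None:
        self.tasks[task.name] = task

    def execution_order(self) -> list[str]:
        # Map each task to its prerequisites; a dependency cycle raises CycleError.
        graph = {name: set(task.depends_on) for name, task in self.tasks.items()}
        return list(TopologicalSorter(graph).static_order())


run = Workflow(domain="example-domain")
run.add(Task("cycling"))
run.add(Task("forecasting", depends_on=("cycling",)))
run.add(Task("post_processing", depends_on=("forecasting",)))
print(run.execution_order())  # ['cycling', 'forecasting', 'post_processing']
```

Describing the run as data rather than as a shell script is what would let a web form create, store and queue a run without the user touching the HPC environment directly.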

Finally, post-processing was migrated into a Python package that could be built using the DevOps pipeline and automatically deployed to an internal PyPI repository. From there, the DevOps process builds a new Docker container that is customized to process the raw forecasting output from the NWP model and generate web graphics for the web viewer. With the post-processing workflow updated to use highly efficient queues for forecast processing, as well as being packaged within a Docker container, the entire post-processing application is decoupled from the NWP application and scalable to as many nodes as needed to work through the queue. This will enable NSSL to easily configure and process multiple WoFS domains simultaneously.
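The queue-driven pattern described above can be illustrated with a small, self-contained Python sketch. It uses an in-process `queue.Queue` and threads as stand-ins for a cloud message queue and Docker-based worker nodes, so it demonstrates the decoupling idea only. The function `render_graphics` and the file names are hypothetical placeholders, not part of the actual WoFS post-processing package.

```python
import queue
import threading


def render_graphics(forecast_file: str) -> str:
    """Hypothetical stand-in for turning raw model output into a web graphic."""
    return forecast_file.replace(".nc", ".png")


def worker(jobs: queue.Queue, results: list, lock: threading.Lock) -> None:
    """Pull forecast files off the queue until a None sentinel arrives."""
    while True:
        item = jobs.get()
        if item is None:  # sentinel: no more work for this worker
            jobs.task_done()
            return
        product = render_graphics(item)
        with lock:
            results.append(product)
        jobs.task_done()


def process_all(files: list[str], n_workers: int) -> list[str]:
    """Process every file with `n_workers` workers sharing one queue."""
    jobs: queue.Queue = queue.Queue()
    results: list[str] = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(jobs, results, lock))
        for _ in range(n_workers)
    ]
    for thread in threads:
        thread.start()
    for name in files:
        jobs.put(name)
    for _ in threads:
        jobs.put(None)  # one sentinel per worker
    jobs.join()
    for thread in threads:
        thread.join()
    return sorted(results)


print(process_all(["fcst_0000.nc", "fcst_0005.nc", "fcst_0010.nc"], n_workers=2))
```

The producer never needs to know how many workers exist, so adding capacity means starting more workers against the same queue. That independence is the property that makes it practical to scale out to as many nodes as needed.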

What does all this mean? What are the benefits? With WoFS now on the cloud, the WoFS application is one step closer to being completely self-contained. A new cloud environment can be created and destroyed in a matter of minutes. In addition, individual WoFS components are modular and scalable, which will enable NSSL to run multiple domains simultaneously in the very near future. There is now just a single web app for starting, monitoring, and viewing model runs, greatly simplifying the process through an easy-to-use user interface and providing the ability to start a WoFS run without requiring a background in HPC. With WoFS on the cloud, NSSL does not have to pay for any unused resources. When a WoFS run is started, the HPC environment is built and begins processing in less than 10 minutes. Upon completion, the entire environment is torn down, after which the only cost associated with the model run is data storage (and that’s a topic for another post!). Finally, another major advantage of WoFS on the cloud is the ability to use new processors as they are offered by cloud providers. For example, Azure recently upgraded all AMD EPYC Milan processors to Milan-X, which immediately boosted Cb-WoFS performance without any additional cost.

Although most of the application is on the cloud, there are still a few WoFS-related components that remain on-premises, such as the pre-processing of observation data. Once these final pieces are migrated to the cloud, Cb-WoFS will be completely self-contained and deployable to any cloud subscription.